Open RAN disaggregation involves virtualizing the CU (Centralized Unit) and the DU (Distributed Unit). By decoupling hardware from software, Open RAN lets operators select different vendors and solutions for each and manage their lifecycles separately. More specifically, it makes it possible to use general-purpose COTS (commercial off-the-shelf) hardware in the RAN and avoid proprietary appliances.

COTS hardware is not always the most efficient solution. It also creates potential vendor lock-in because of the omnipresent Intel-based x86 architecture. This differs from the vendor lock-in of the traditional RAN, because COTS hardware has a more limited role than proprietary appliances do, but it still limits operators' choice and flexibility. In short, COTS hardware needs to be complemented by more specialized hardware.

Change is underway, with silicon vendors entering the market with accelerators (e.g., Qualcomm, Marvell, Xilinx, Nvidia, and Intel) and SoC devices (e.g., Picocom) that are specifically designed and optimized for Layer 1 processing in the RAN. COTS remains the hardware of choice for the less intensive processing above Layer 1. Layer 1 processing is mostly confined to the O-DU and is the most complex and time-sensitive processing in wireless networks. COTS hardware is not optimized for this type of processing and is therefore less efficient than dedicated hardware.

At the Distributed Unit (DU), Layer 1 processing involves complex algorithms, including Forward Error Correction (FEC), channel estimation, modulation, and layer mapping. Accelerator cards bridge the gap between these demanding tasks and the limitations of conventional general-purpose processors (GPPs).

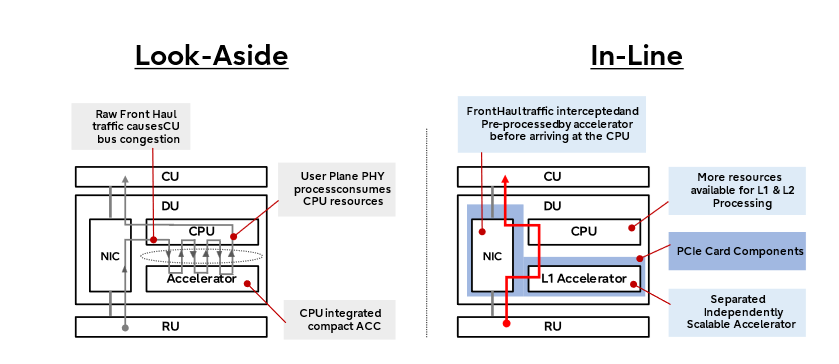

There are two main types of accelerator architectures: inline and lookaside. Each architecture employs accelerator cards that serve different functions.

- Inline Layer 1 accelerator: In the inline architecture, Layer 1 processing for user plane data is intercepted before it reaches the CPU. Inline Layer 1 accelerator cards can efficiently handle almost all Layer 1 processing tasks, freeing up CPU resources for Layer 2 and Layer 3 processing.

- Lookaside FEC accelerators: In the lookaside architecture, the CPU acts as the master controller for Layer 1 processing, with an accelerator card, often a separate PCIe card, handling select functions like FEC. However, many real-time computations continue to be processed by the CPU, including Layer 2 and Layer 3 processing.

The principal difference between them is that in lookaside acceleration only selected functions are sent to the accelerator and their results are returned to the CPU, while in inline acceleration part or all of the data flow and its functions pass through the accelerator. For higher-bandwidth Open RAN applications, full Layer 1 offload may be required. In this case, an inline hardware accelerator is likely to deliver the best results, with the lowest latency and the fewest CPU cores required.

Lookaside accelerators are less efficient but allow the COTS server to decide which functions should be sent to the accelerator. Inline accelerators use a simpler interface to the COTS server and process all, or almost all, Layer 1 functions.
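The difference between the two data paths can be illustrated with a small toy model. This is only a sketch under stated assumptions: the stage list reuses the Layer 1 tasks named earlier, the names `L1_STAGES`, `PathTrace`, `lookaside`, and `inline` are hypothetical, and the transfer counts are a deliberate simplification (one round trip per offloaded function, and full rather than partial Layer 1 offload in the inline case). It does not model any vendor's API or real latency.

```python
from dataclasses import dataclass, field

# Hypothetical, simplified list of Layer 1 stages (names taken from the text).
L1_STAGES = ["fec", "channel_estimation", "modulation", "layer_mapping"]


@dataclass
class PathTrace:
    """Where each stage ran, and how often data crossed the CPU/accelerator boundary."""
    placement: dict = field(default_factory=dict)
    transfers: int = 0


def lookaside(offloaded=("fec",)):
    """CPU drives Layer 1; only the selected stages make a round trip to the accelerator."""
    trace = PathTrace()
    for stage in L1_STAGES:
        if stage in offloaded:
            trace.placement[stage] = "accelerator"
            trace.transfers += 2  # CPU -> accelerator, then accelerator -> CPU
        else:
            trace.placement[stage] = "cpu"
    return trace


def inline():
    """Assumes full offload: every Layer 1 stage runs on the accelerator."""
    trace = PathTrace()
    for stage in L1_STAGES:
        trace.placement[stage] = "accelerator"
    trace.transfers = 1  # single hand-off to the CPU for Layer 2 and above
    return trace


if __name__ == "__main__":
    for name, trace in (("lookaside", lookaside()), ("inline", inline())):
        print(name, trace.placement, "transfers:", trace.transfers)
```

In this model, offloading more functions in lookaside mode adds a round trip each time, whereas the inline path keeps a single hand-off regardless of how many Layer 1 stages the accelerator runs. That is one way to picture why inline acceleration tends to suit full Layer 1 offload.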