Introduction

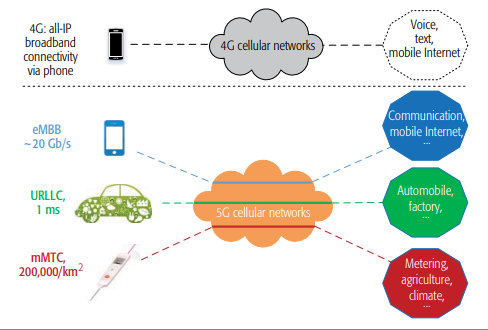

Deployment of 5G wireless communication systems is expected to begin in 2020. With new projections, new technologies, and new network architectures, traffic management for 5G networks will pose significant technical challenges.

In recent years, AI technologies, especially ML technologies, have shown considerable success in many application domains, suggesting their future potential to solve the problem of 5G traffic management.

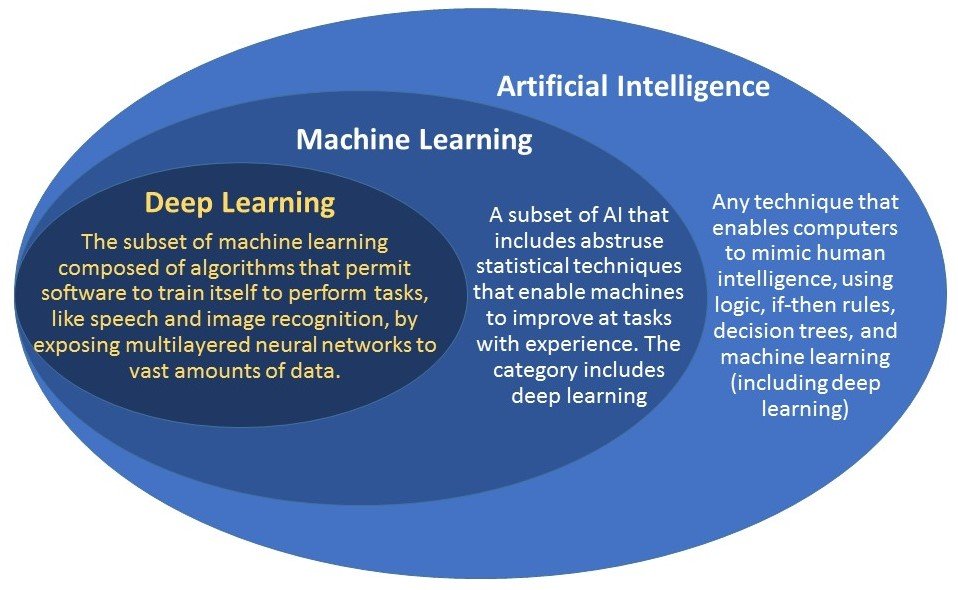

The Concept of AI

AI is the science and engineering of making machines as intelligent as humans and has long been applied to optimize communication networks in diverse configurations.

According to the scope of intelligence, AI can be divided into two levels:

- The first and basic level of AI is that a machine or entity offers multiple pre-defined options and responds to each state of the environment in a different but deterministic manner. For example, 5G will allow grant-based and grant-free transmission for eMBB and mMTC services, respectively. In other words, the network will intelligently set a new configuration after detecting different pre-defined service indicators.

- The second and complete level of AI is that a machine or entity possesses the full capability to interact (e.g., sense, mine, predict, and reason) with the environment. More importantly, the machine or entity is able to learn how to make appropriate responses, even when it faces unfamiliar scenarios or tasks.
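To make the first level concrete, here is a minimal sketch of a purely rule-based selector, using the eMBB and mMTC example from the text. The names `PREDEFINED_MODES` and `select_transmission_mode` are hypothetical and chosen only for illustration; a real network configures this through far richer signalling.

```python
# Pre-defined options, following the eMBB/mMTC example in the text.
# The table and function names are illustrative assumptions.
PREDEFINED_MODES = {
    "eMBB": "grant-based",
    "mMTC": "grant-free",
}


def select_transmission_mode(service_indicator):
    """Return the pre-defined transmission mode for a detected service indicator.

    This is first-level behaviour: a fixed, deterministic lookup with no learning.
    """
    try:
        return PREDEFINED_MODES[service_indicator]
    except KeyError:
        # An indicator outside the pre-defined set cannot be handled at this level.
        raise ValueError(f"no pre-defined option for {service_indicator!r}") from None


if __name__ == "__main__":
    for indicator in ("eMBB", "mMTC"):
        print(indicator, "->", select_transmission_mode(indicator))
```

The lookup fails on any indicator it was not given in advance, which is exactly the gap the second level is meant to close by learning appropriate responses.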

Artificial Intelligence for 5G Networks

5G networks hold great promise for network operators to better serve their customers with higher speeds, better performance, and more data. But they’ll be extremely complex to manage, given the massive amounts of data they are expected to deliver reliably, securely, and in real time, not only to mobile phones but also to millions of IoT devices and home set-top boxes.

Artificial Intelligence (AI) and Machine Learning (ML) have recently received much attention as key enablers for managing future 5G networks more efficiently.

Why Is AI Important for 5G Networks?

Due to the development of smarter 5G networks, it will be feasible to provide customized end-to-end network slices (NS) to simultaneously satisfy distinct service requirements, such as ultra-low latency in URLLC and ultra-high throughput in eMBB.

Meanwhile, new features such as the dynamic air interface, virtualized network, and network slicing introduce complicated system designs and optimization requirements to address the challenges related to network operation and maintenance.

Fortunately, such problems fall within the scope of artificial intelligence (AI), which offers new concepts and possibilities beyond traditional methods. As a result, AI has recently regained attention in the field of communications, in both academia and industry.

Research Directions for AI in 5G

AI can be broadly applied in the design, configuration, and optimization of 5G networks. Specifically, AI is relevant to three main technical problems in 5G:

- Combinatorial Optimization: A typical example is the network resource allocation problem. In a resource-limited network, resources must be allocated among the users who share the network so that resource utilization is as efficient as possible. With the HetNet architecture in 5G NR and features such as network virtualization, network slicing, and self-organizing networks (SON), network resource allocation problems are growing in complexity and require more effective solutions.

- Detection: The design of the communication receiver is an example of the detection problem. An optimized receiver recovers the transmitted messages from the received signals with a minimal detection error rate. Detection will be challenging in 5G within the massive MIMO framework.

- Estimation: A typical example is the channel estimation problem. 5G requires accurate estimation of the channel state information (CSI) to communicate over the spatially correlated channels of massive MIMO. A well-known approach uses a training sequence (or pilot sequence): a known signal is transmitted, and the CSI is estimated from knowledge of both the transmitted and received signals.
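The pilot-based approach in the estimation item can be illustrated with a small least-squares sketch. It is deliberately simplified and rests on assumptions not in the text: a single flat-fading channel coefficient `h` (rather than a massive MIMO channel matrix), the model `y[i] = h * x[i] + noise[i]`, and QPSK-like pilot symbols. The function names are hypothetical.

```python
import random


def ls_channel_estimate(pilots, received):
    """Least-squares estimate of one flat-fading channel coefficient h.

    Assumes y[i] = h * x[i] + noise[i], where the pilots x are known to the
    receiver. The LS solution is sum(conj(x) * y) / sum(|x|^2).
    """
    if len(pilots) != len(received) or not pilots:
        raise ValueError("pilots and received must be non-empty and equal length")
    numerator = sum(x.conjugate() * y for x, y in zip(pilots, received))
    energy = sum(abs(x) ** 2 for x in pilots)
    return numerator / energy


def simulate_received(h, pilots, noise_std, rng):
    """Pass the pilots through the channel and add complex Gaussian noise."""
    return [
        h * x + complex(rng.gauss(0, noise_std), rng.gauss(0, noise_std))
        for x in pilots
    ]


if __name__ == "__main__":
    rng = random.Random(0)  # fixed seed so runs are repeatable
    true_h = 0.8 - 0.6j  # illustrative channel coefficient
    pilots = [1 + 0j, -1 + 0j, 1j, -1j] * 16  # known pilot sequence
    received = simulate_received(true_h, pilots, noise_std=0.05, rng=rng)
    h_hat = ls_channel_estimate(pilots, received)
    print(f"true h = {true_h}, estimated h = {h_hat:.3f}")
```

Averaging over more pilots reduces the effect of noise on the estimate, at the cost of spending more transmissions on known symbols instead of data. Learning-based estimators aim to improve on this trade-off, but they are outside the scope of this sketch.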